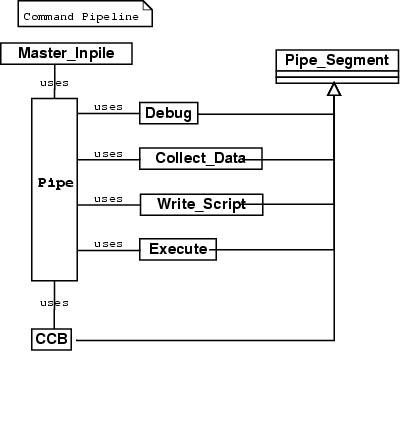

Command Pipeline

The Command Pipeline provides a highly flexible mechanism for executing Commands.

Motivation

The main purpose of the Command Pipeline is to move the complexity of executing a Command (see the Section called Command) out of the code and into the class structure. This provides flexibility, because the process of executing a Command can be changed simply by adding new objects and attaching them to the Command Pipeline.

Mechanics

Note: In this diagram, the order of the Pipe_Segment subclasses reflects the order of execution, unless the description of a segment states otherwise.

During initialization of OpenCAGE, the pipe segment objects enrol with the Pipe in a fixed order. When a Command object is given to the Pipe, the Pipe passes it to each pipe segment object in turn, and each segment performs one step of the execution process. A Command object may itself create new Command objects, which are then also executed by the Pipe.
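The mechanism above can be sketched in Python as follows. This is not the OpenCAGE (Ada) implementation; the names `Pipe`, `PipeSegment`, `enrol`, `process`, `children` and `spawned` are hypothetical. Segments are enrolled in order, the Pipe hands each Command to every segment in turn, and Commands created during execution are queued and run by the same Pipe.

```python
from collections import deque


class Command:
    """Hypothetical Command: records the steps applied to it and may spawn new Commands."""

    def __init__(self, name, children=()):
        self.name = name
        self.log = []                    # steps performed on this Command, in order
        self.children = list(children)   # Commands this one creates when executed
        self.spawned = []                # Commands actually created during execution

    def execute(self):
        self.log.append("execute")
        self.spawned.extend(self.children)


class PipeSegment:
    """Base class: each segment performs exactly one step of the execution process."""

    def process(self, command):
        raise NotImplementedError


class LogSegment(PipeSegment):
    """Placeholder segment that only records that its step was applied."""

    def __init__(self, step):
        self.step = step

    def process(self, command):
        command.log.append(self.step)


class ExecuteSegment(PipeSegment):
    def process(self, command):
        command.execute()


class Pipe:
    def __init__(self):
        self.segments = []   # execution order = enrolment order

    def enrol(self, segment):
        self.segments.append(segment)

    def run(self, command):
        """Pass the Command through every segment, then run any Commands it created."""
        pending = deque([command])
        executed = []
        while pending:
            current = pending.popleft()
            for segment in self.segments:
                segment.process(current)
            executed.append(current.name)
            pending.extend(current.spawned)
        return executed


pipe = Pipe()
pipe.enrol(LogSegment("collect_data"))
pipe.enrol(ExecuteSegment())
root = Command("open_file", children=[Command("refresh_view")])
print(pipe.run(root))   # ['open_file', 'refresh_view']
print(root.log)         # ['collect_data', 'execute']
```

Because the order of execution lives entirely in the `segments` list, changing the process means enrolling a different set of segments rather than editing the execution code.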

A major concern is running a long-lasting Command concurrently with the GUI. There are three alternative ways of solving this problem:

Alternative 1: The GUI and the Command Pipeline each run in a separate task and communicate via shared objects.

Pros:

flexibility

independence of the two tasks

Cons:

The Command Pipeline has to access parts of the GUI in order to open dialogs and prompt the user. Because of the technical problems of using threads with GtkAda, this alternative entails a high risk of failure.
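A rough Python illustration of the first alternative, using standard-library threads and thread-safe queues as the "shared objects". It is only a sketch under these assumptions, not GtkAda code; all names are hypothetical. It shows the cost mentioned in the cons: the pipeline task may never open a dialog itself, so every prompt has to be routed through shared objects to the one task that owns the GUI.

```python
import queue
import threading

# Shared objects through which the two tasks communicate (hypothetical).
commands = queue.Queue()      # GUI task -> pipeline task: Commands to execute
gui_requests = queue.Queue()  # pipeline task -> GUI task: "please prompt the user"
answers = queue.Queue()       # GUI task -> pipeline task: the user's reply
results = []                  # written only by the pipeline task


def pipeline_task():
    """Runs in its own thread and never touches GUI objects directly."""
    while True:
        command = commands.get()
        if command is None:          # sentinel: shut down and tell the GUI task
            gui_requests.put(None)
            return
        # The Command needs user input, so it must ask the GUI task to open a dialog.
        gui_requests.put(f"Parameters for {command}?")
        reply = answers.get()        # blocks until the GUI task answers
        results.append((command, reply))


def gui_task(simulated_user_replies):
    """Stands in for the GtkAda main loop: the only task allowed to open dialogs."""
    replies = iter(simulated_user_replies)
    while True:
        request = gui_requests.get()
        if request is None:
            return
        answers.put(next(replies))   # a real GUI would show a dialog here


worker = threading.Thread(target=pipeline_task)
gui = threading.Thread(target=gui_task, args=(["42", "yes"],))
worker.start()
gui.start()
commands.put("Resize")
commands.put("Delete")
commands.put(None)
worker.join()
gui.join()
print(results)   # [('Resize', '42'), ('Delete', 'yes')]
```

Even in this toy version, each user interaction becomes a request/reply protocol between two tasks; with a real GUI toolkit the risk grows, which is the concern raised above.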

Alternative 2: The GtkAda main loop is the only task that accesses the GUI. Idle handlers in the GtkAda main loop run the Command Pipeline whenever the main loop is idle.

Pros:

still provides some flexibility

no problems with threading in GtkAda

Cons:

not as flexible as the first alternative

The GUI is blocked while each individual Command is executing.
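The second alternative can be sketched with a toy, single-threaded main loop in Python. `MainLoop`, `idle_add` and `run_one_command` are hypothetical stand-ins, not GtkAda calls; the only borrowed idea is the GLib convention that an idle callback returning true stays installed and one returning false is removed. Each idle call pipes exactly one Command, so pending GUI events are handled between Commands but not during one.

```python
from collections import deque


class MainLoop:
    """Toy stand-in for the GtkAda main loop (hypothetical, single-threaded)."""

    def __init__(self):
        self.events = deque()   # pending GUI events (redraws, clicks, ...)
        self.idle_handlers = []
        self.trace = []         # what the loop did, in order

    def idle_add(self, handler):
        self.idle_handlers.append(handler)

    def iterate(self):
        """One iteration: GUI events first, idle handlers only when no event is pending."""
        if self.events:
            self.trace.append(self.events.popleft())
        elif self.idle_handlers:
            handler = self.idle_handlers[0]
            # As in GLib, a handler that returns False is removed.
            if not handler():
                self.idle_handlers.pop(0)

    def run_until_done(self):
        while self.events or self.idle_handlers:
            self.iterate()


master_inpile = deque(["Load", "Rotate", "Save"])  # hypothetical queued Commands
loop = MainLoop()


def run_one_command():
    """Idle handler: pipe exactly one Command through the pipeline per call."""
    if not master_inpile:
        return False
    command = master_inpile.popleft()
    loop.trace.append(f"executed {command}")
    # Simulate a GUI event arriving while Load runs; it is handled before the next Command.
    if command == "Load":
        loop.events.append("redraw")
    return bool(master_inpile)   # stay installed while Commands remain


loop.idle_add(run_one_command)
loop.run_until_done()
print(loop.trace)   # ['executed Load', 'redraw', 'executed Rotate', 'executed Save']
```

The "redraw" event is served between "Load" and "Rotate", but nothing can interrupt a Command once it has started, which is the blocking behavior listed under the cons.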

Alternative 3: The Command Pipeline is called in the event task that was started by the GUI. The Gtk main loop resumes only after the Command Pipeline has finished.

Pros:

easy to implement

Cons:

If a Command creates new Command objects and puts them into the Command Pipeline, the GUI remains blocked until all of these Commands have been executed.
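For contrast, a Python sketch of the third alternative: the whole pipeline runs inside one event handler, including every Command spawned along the way, and only then does the main loop get control back. The handler name and the `spawn` mapping are hypothetical illustrations.

```python
from collections import deque


def on_button_clicked(first_command, spawn, trace):
    """Event handler that runs the whole Command Pipeline before returning (hypothetical).

    spawn maps a Command name to the Commands it creates; they are executed
    in the same call, so control returns to the main loop only at the very end.
    """
    pending = deque([first_command])
    while pending:
        command = pending.popleft()
        trace.append(f"executed {command}")
        pending.extend(spawn.get(command, []))


trace = []
gui_events = deque(["redraw"])          # an event already waiting in the main loop
on_button_clicked("Import", {"Import": ["Parse", "Layout"], "Layout": ["Render"]}, trace)
# Only now does the main loop regain control and handle the waiting event.
while gui_events:
    trace.append(gui_events.popleft())
print(trace)
# ['executed Import', 'executed Parse', 'executed Layout', 'executed Render', 'redraw']
```

The waiting "redraw" is served only after the entire chain of spawned Commands, which is exactly the drawback listed above.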

The second alternative will be implemented as a compromise between technical risk and flexibility.

The Parts of the Command Pipeline

- Master Inpile (MIP)

The Master Inpile (MIP) is a FIFO (see the Section called FIFO in Chapter 10) that collects the Commands that are to be executed. Using a FIFO ensures that Commands are executed in the order in which they were invoked.

Whenever the Command Pipeline is launched, it looks in the Master Inpile (MIP) for new Command objects, takes the first one, and pipes it through the pipe segments.
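A minimal Python sketch of the Master Inpile as a FIFO and of a single pipeline launch. `MasterInpile`, `put`, `take_first` and `launch_pipeline` are hypothetical names, and the segments are reduced to plain functions for brevity.

```python
from collections import deque


class MasterInpile:
    """FIFO of Commands waiting to be executed (hypothetical Python stand-in)."""

    def __init__(self):
        self._fifo = deque()

    def put(self, command):
        self._fifo.append(command)      # enqueue at the back

    def take_first(self):
        # Dequeue from the front; None if no Command is waiting.
        return self._fifo.popleft() if self._fifo else None

    def __len__(self):
        return len(self._fifo)


def launch_pipeline(mip, segments):
    """One launch: take the first Command, if any, and pipe it through every segment."""
    command = mip.take_first()
    if command is None:
        return None
    for segment in segments:
        segment(command)
    return command


mip = MasterInpile()
for name in ["first", "second", "third"]:
    mip.put(name)

steps = []
segments = [lambda c: steps.append(("collect", c)), lambda c: steps.append(("execute", c))]
while mip:                              # truthiness falls back to __len__
    launch_pipeline(mip, segments)
print([c for step, c in steps if step == "execute"])   # ['first', 'second', 'third']
```

Commands leave the MIP in the order they were put in, which is the ordering guarantee the FIFO is meant to provide.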

- Debug

The debugging segment is not necessarily located directly after the Master Inpile (MIP). Its position will be decided during implementation, wherever it is needed.

The debugging segment attaches a listener to the Command object, so that debugging output can be produced during all subsequent segments.
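A small Python sketch of the debugging segment, assuming listeners are simple callables notified by the Command at each step; `attach`, `notify` and the segment functions are hypothetical. It also shows why the segment's position matters: only segments that run after it produce debug output.

```python
class Command:
    """Hypothetical Command that notifies attached listeners of each step."""

    def __init__(self, name):
        self.name = name
        self.listeners = []

    def attach(self, listener):
        self.listeners.append(listener)

    def notify(self, event):
        for listener in self.listeners:
            listener(self, event)


def debug_segment(command, output):
    """Attach a listener that writes debug output for every later notification."""
    command.attach(lambda cmd, event: output.append(f"[debug] {cmd.name}: {event}"))


def collect_data_segment(command):
    command.notify("collecting data")


def execute_segment(command):
    command.notify("executing")


debug_output = []
cmd = Command("Move")
collect_data_segment(cmd)        # runs before the debug segment: not reported
debug_segment(cmd, debug_output)
execute_segment(cmd)             # runs after it: reported
print(debug_output)   # ['[debug] Move: executing']
```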

- Collect_Data

The Collect_Data segment asks the current Command to collect its data.
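A Python sketch of the Collect_Data step, assuming each concrete Command knows which data it needs and the segment merely delegates. `ScaleCommand`, `factor` and the `ask` callable (standing in for a dialog) are hypothetical.

```python
class Command:
    """Base class: concrete Commands decide what data they need (hypothetical)."""

    def collect_data(self, ask):
        pass   # default: nothing to collect


class ScaleCommand(Command):
    def collect_data(self, ask):
        # 'ask' stands in for whatever prompts the user (e.g. a dialog).
        self.factor = float(ask("Scale factor?"))


class CollectDataSegment:
    def process(self, command, ask):
        # The segment does not know which data is needed; it just delegates.
        command.collect_data(ask)


cmd = ScaleCommand()
CollectDataSegment().process(cmd, ask=lambda prompt: "2.5")
print(cmd.factor)   # 2.5
```

Keeping the knowledge of required data inside each Command lets the same segment serve every Command type.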

- Link_Script_Observer

The Link_Script_Observer segment attaches a Script_Observer to the current Command object (see also the Section called Scripting Output).
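A Python sketch of the Link_Script_Observer step. The format of the scripting output is not specified here, so the one-line-per-Command format below is purely a hypothetical assumption, as are the names `ScriptObserver`, `command_executed` and `LinkScriptObserverSegment`.

```python
class Command:
    def __init__(self, name, **params):
        self.name = name
        self.params = params
        self.observers = []

    def attach(self, observer):
        self.observers.append(observer)

    def execute(self):
        # ... the actual work would happen here ...
        for observer in self.observers:
            observer.command_executed(self)


class ScriptObserver:
    """Hypothetical: turns each executed Command into one line of script."""

    def __init__(self):
        self.lines = []

    def command_executed(self, command):
        args = ", ".join(f"{k}={v!r}" for k, v in sorted(command.params.items()))
        self.lines.append(f"{command.name}({args})")


class LinkScriptObserverSegment:
    def __init__(self, script_observer):
        self.script_observer = script_observer

    def process(self, command):
        command.attach(self.script_observer)   # link the observer to this Command


script = ScriptObserver()
cmd = Command("rotate", angle=90, axis="z")
LinkScriptObserverSegment(script).process(cmd)
cmd.execute()
print(script.lines)   # ["rotate(angle=90, axis='z')"]
```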

- Execute

The Execute segment tells the Command to execute itself. If the Command is to be run in its own task, the task is started in this segment.
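A Python sketch of the Execute segment, using a standard-library thread in place of an Ada task. The `own_task` flag and the boolean return value (telling the pipeline that the Command was handed to its own task) are hypothetical design choices, not part of the original description.

```python
import threading


class Command:
    """Hypothetical Command; 'own_task' marks Commands that run in their own task."""

    def __init__(self, name, own_task=False):
        self.name = name
        self.own_task = own_task
        self.done = threading.Event()
        self.thread = None

    def execute(self):
        # ... the actual work of the Command ...
        self.done.set()


class ExecuteSegment:
    def process(self, command):
        """Execute the Command; start a separate thread if it runs in its own task.

        Returns True if the Command was handed to its own task.
        """
        if command.own_task:
            command.thread = threading.Thread(target=command.execute)
            command.thread.start()
            return True          # control returns to the pipeline immediately
        command.execute()        # executed synchronously in the current task
        return False


segment = ExecuteSegment()
quick = Command("Select")
long_running = Command("Render", own_task=True)
print(segment.process(quick), quick.done.is_set())   # False True
print(segment.process(long_running))                 # True
long_running.thread.join()
print(long_running.done.is_set())                     # True
```

The return value is one possible way for the pipeline to decide which Commands must be handed on for tracking after this segment.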

- Command Catchment Bassin (CCB)

When a Command is to be run in its own task, it falls out of the Execute segment into the Command Catchment Bassin (CCB). The CCB enrols as an observer of the Command and holds a list of all Commands currently running in their own tasks. When a Command has finished, it notifies its observers with the destruction message, and the CCB then destroys the Command.
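A Python sketch of the Command Catchment Bassin, with standard-library threads standing in for Ada tasks. The method names `catch` and `notify_destruction` are hypothetical. Because completion notifications arrive from other threads, the list of running Commands is guarded by a lock, and each Command is registered before its task starts so that its notification cannot arrive early.

```python
import threading


class Command:
    def __init__(self, name):
        self.name = name
        self.observers = []

    def attach(self, observer):
        self.observers.append(observer)

    def run(self):
        # ... long-running work in the Command's own task ...
        for observer in list(self.observers):
            observer.notify_destruction(self)   # the "destruction message"


class CommandCatchmentBassin:
    """Keeps track of Commands running in their own tasks (hypothetical sketch)."""

    def __init__(self):
        self._lock = threading.Lock()   # notifications arrive from other threads
        self.running = []

    def catch(self, command):
        with self._lock:
            self.running.append(command)
        command.attach(self)            # enrol as an observer of the Command

    def notify_destruction(self, command):
        with self._lock:
            self.running.remove(command)
        # Removing the Command from the list is the Python analogue of destroying
        # it; in Ada the object would be deallocated here (e.g. via an instance
        # of Ada.Unchecked_Deallocation).


ccb = CommandCatchmentBassin()
commands = [Command("Render"), Command("Export")]
threads = []
for cmd in commands:
    ccb.catch(cmd)                      # registered before its task starts
    t = threading.Thread(target=cmd.run)
    threads.append(t)
    t.start()
for t in threads:
    t.join()
print(len(ccb.running))   # 0
```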